On AI, Abduction, and the Tacit Mind

For some time, a powerful critique has shadowed the rapid progress of Large Language Models (LLMs). Framed through philosopher Charles Peirce’s triadic model of reasoning (deduction, induction, and abduction), the argument is compelling: today’s AI is a master of induction, a powerful generalizer from data, but it can only mimic true deduction and remains incapable of genuine abduction, the creative leap that sparks new ideas. What it performs is better captured by complexity theorist Dave Snowden’s apt phrase, “artificial inference.” This view suggests a fundamental roadblock on the path to Artificial General Intelligence (AGI): a performance plateau that more data and processing power alone cannot overcome. However, recent breakthroughs, such as an AI system achieving gold-medal standard at the International Mathematical Olympiad, invite us to reconsider this narrative and to examine the very nature of intelligence itself.

The power of the modern inductive engine deserves far greater recognition. With access to a near-totality of humanity’s codified knowledge, an LLM can simulate deductive reasoning with astonishing effectiveness. Proving Schur’s inequality (posed as the first part of Problem 3 of the 1977 British Mathematical Olympiad) would require significant ingenuity from a human student, yet it becomes a trivial act of recall for a machine that recognizes the pattern. This capability blurs the practical distinction between genuine understanding and sophisticated mimicry. For the vast majority of intellectual tasks, an AI’s ability to execute known patterns flawlessly and at superhuman speed makes it functionally superior to most human experts, challenging our traditional benchmarks for reasoning.
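To make the example concrete, here is Schur’s inequality in its standard form, followed by the short textbook argument that a pattern-matching system would be expected to recall. This is a sketch of the usual proof, not a transcription of the 1977 paper; the BMO wording may pose only a particular case (for instance the exponent t = 1), which is an assumption we have not checked against the original question.

```latex
% Schur's inequality (standard form): for real a, b, c \ge 0 and t > 0,
a^t(a-b)(a-c) + b^t(b-a)(b-c) + c^t(c-a)(c-b) \ge 0.

% Proof sketch. The expression is symmetric, so assume a \ge b \ge c \ge 0.
% Group the first two terms:
a^t(a-b)(a-c) + b^t(b-a)(b-c) = (a-b)\bigl[a^t(a-c) - b^t(b-c)\bigr] \ge 0,
% because a - b \ge 0, a^t \ge b^t \ge 0 and a - c \ge b - c \ge 0.

% The remaining term is also non-negative:
c^t(c-a)(c-b) = c^t(a-c)(b-c) \ge 0.

% Adding the two non-negative quantities yields the inequality.
```

The whole proof hinges on a single recognizable move (ordering the variables and grouping two terms), which is precisely the kind of pattern that is hard to discover but easy to recall.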

This performance differential highlights a fundamental architectural difference between human and artificial cognition. Human intelligence, even at its most brilliant, is “spiky.” The legend of Alain Connes, a future Fields Medalist, being stumped by the once-in-a-millennium difficulty of the 1966 Koszul exam (that year’s École Normale Supérieure entrance paper) illustrates that human genius is not a constant state. It is rather, to borrow philosopher Alain de Botton’s description of wisdom, a fragile achievement made of brilliant, intermittent peaks. We are limited by biology, fatigue, and memory. In stark contrast, an LLM is “constant.” It is a tireless, relentless engine of logic with perfect recall of learned patterns, capable of exploring trillions of possibilities without waiting for inspiration or needing rest. This constancy is a new kind of intellectual force, one whose sheer scale and speed can produce results indistinguishable from, or superior to, human insight.

Yet the source of our human “spikes” remains a critical, and perhaps uniquely biological, phenomenon. These peaks of insight are often the product of abduction, driven by the incubation effect famously chronicled by Henri Poincaré. After intense conscious effort (preparation), the mind sets a problem aside, allowing unconscious processes to work on it (incubation). The solution often arrives in a sudden flash of illumination, a shower thought or a midnight insight, that feels disconnected from the preceding effort. This interplay between focused work and subconscious incubation is a powerful engine of human creativity, producing “black swan” ideas that propel humanity forward. It is a process that today’s “always-on” AI, which lacks a subconscious, cannot replicate.

This brings us to what may be the most profound roadblock for artificial intelligence: embodied cognition. The challenge is best understood through the lens of tacit versus explicit knowledge. As philosopher Michael Polanyi famously stated, “we can know more than we can tell.” LLMs are the undisputed masters of the explicit world: the universe of text, data, and facts that can be written down. Their power comes from synthesizing this domain at an unimaginable scale.

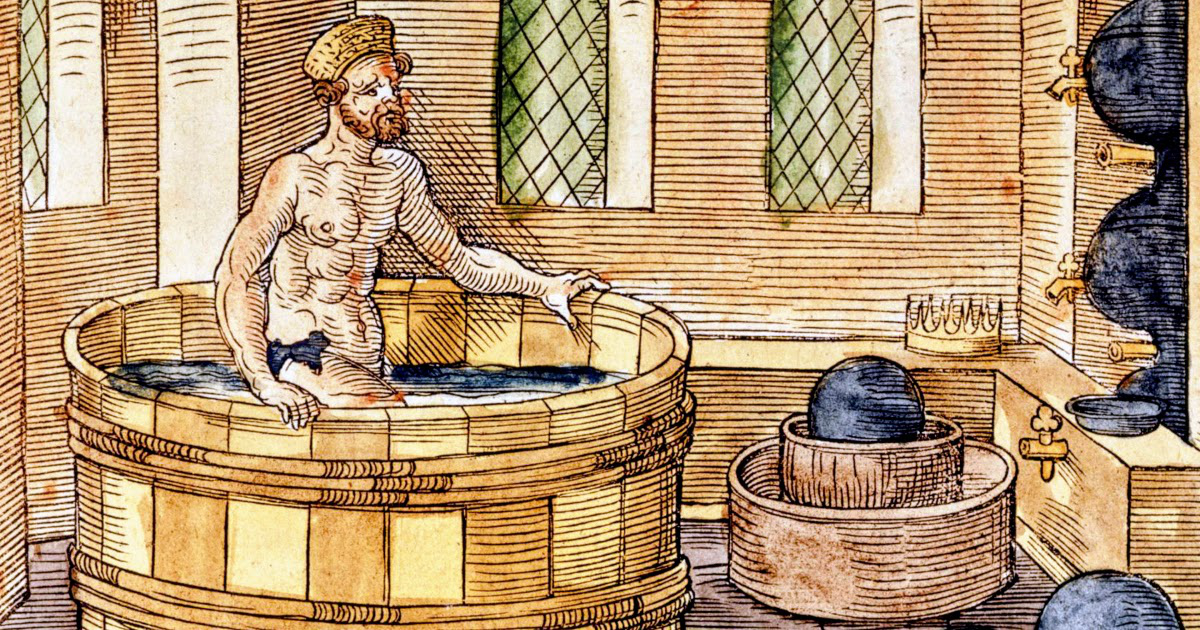

Human expertise, however, is built upon a vast foundation of tacit knowledge, the intuitive “know-how” that cannot be articulated. It is the knowledge needed to balance a bicycle or the wisdom to read a room, skills acquired only through physical experience and sensory feedback. This is the same barrier that caused the expert systems of the 1970s and 1980s to fail: they could not capture the inarticulable wisdom of their human counterparts. Thus, the ultimate challenge for AGI is not just replicating a style of reasoning, but accessing an entire domain of knowledge that may be forever closed to a disembodied mind.

Perhaps the road to AGI will not be paved with more data, a point Yann LeCun often makes, but with a deeper understanding of what it means to know without telling.

References

- Axel Arno. (2022). L’épreuve de mathématique la plus terrifiante (ENS 1966). youtube.com/watch?v=WQIEOBjTQ1o.

- British Mathematical Olympiad 1977. bmos.ukmt.org.uk/home/bmo-1977.pdf.

- Alain de Botton. (2019). The School of Life: An Emotional Education.

- Yann LeCun. (2025). The Shape of AI to Come! Yann LeCun at AI Action Summit 2025. youtube.com/watch?v=xnFmnU0Pp-8.

- Thang Luong and Edward Lockhart. (2025). Advanced version of Gemini with Deep Think officially achieves gold-medal standard at the International Mathematical Olympiad. deepmind.google/discover/blog/advanced-version-of-gemini-with-deep-think-officially-achieves-gold-medal-standard-at-the-international-mathematical-olympiad/.

- Charles Peirce. (1992). The Essential Peirce, Volume 1 (1867–1893): Selected Philosophical Writings.

- Henri Poincaré. (2007). Science and Method.

- Michael Polanyi. (1966). The Tacit Dimension.

- Voicecraft. (2025). E131| Artificial Intelligence & Human Reasoning | Metacrisis vs Polycrisis | Dave Snowden & Tim Adalin. podcasts.apple.com/de/podcast/voicecraft/id1271039497?l=en-GB&i=1000718857654.